I guess we all agree that one of the biggest obstacles to getting a contribution out is getting the system ready to start working on it. Today I want to talk a bit about generating Android binaries on our own machines.

At the KDE Edu sprint we had the stark realisation that it’s very frustrating to keep pushing the project forward while not being able to deliver fresh packages of our applications swiftly on different systems. We looked into Windows, Flatpak and Snap and, personally, I looked into Android once again.

Nowadays, KDE developers develop the applications on their own systems and then create the binaries on those same systems. Usually it’s a team effort where perhaps just one person on the team is familiar with Android and has the whole development combo in place: Android SDK, Android NDK, Qt binaries and often several precompiled KDE Frameworks. That is no fun, and a fairly complex setup.

Let’s fix that.

Going back to the initial premise of delivering KDE Edu binaries, the first thing we need is a continuous distribution system for Android, much like what we already have with binary-factory.kde.org.

Our applications will bundle some KDE Frameworks, so we need to know which of them work on Android and make sure they don’t break, much like what build.kde.org already does for other platforms.

And last but not least, while we are at it, we need a system that makes it simple for developers to do the same thing our automated systems do.

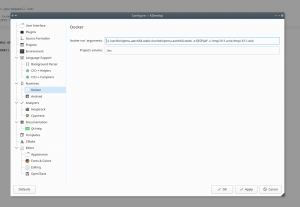

Docker to the rescue

We needed a way to pull everything we need into a single system: the SDK, the NDK, Qt binaries and a few build dependencies. For this we created the following image:

https://hub.docker.com/r/kdeorg/android-sdk/ (Source)

With this image, anyone can create binaries for their application locally.
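As a rough illustration of what "locally" looks like, here is a minimal Python sketch that assembles a `docker run` invocation for the image above, bind-mounting a local source checkout into the container and opening a shell there. The image name comes from the Docker Hub URL; the mount point `/src` and the assumption that the image ships `bash` are hypothetical, so check the wiki for the layout the image actually expects. Only standard `docker run` flags are used.

```python
import os
import shlex
from typing import List

# Image name taken from the Docker Hub URL above.
IMAGE = "kdeorg/android-sdk"

# Hypothetical mount point inside the container (an assumption, not
# necessarily the path the image is set up for).
CONTAINER_SRC = "/src"


def docker_run_command(source_dir: str, image: str = IMAGE,
                       container_src: str = CONTAINER_SRC) -> List[str]:
    """Build a `docker run` command that mounts a local checkout into the
    Android SDK container and starts an interactive shell inside it."""
    host_src = os.path.abspath(source_dir)
    return [
        "docker", "run",
        "--rm",                               # delete the container on exit
        "-it",                                # interactive terminal
        "-v", f"{host_src}:{container_src}",  # bind-mount the checkout
        "-w", container_src,                  # start in the mounted checkout
        image,
        "bash",                               # assumes the image provides bash
    ]


if __name__ == "__main__":
    cmd = docker_run_command(".")
    # Print the command so it can be inspected or pasted into a terminal.
    print(shlex.join(cmd))
    # To actually start the container:
    # import subprocess; subprocess.run(cmd, check=True)
```

Keeping the source on the host and only mounting it means the container can be thrown away after each session while the build tree stays on your machine.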

We have also deployed it on build.kde.org to build some KDE Frameworks. Note that some dependencies are not available on Android, so our offer is limited compared to traditional GNU/Linux. We are also not running tests; it’s something we could look into, but I’m not yet sure how useful that would be.
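To see why a few missing dependencies limit the offer so much, consider that a framework can only be built if everything it depends on, directly or transitively, can be built too. The Python sketch below computes that set with a simple fixed-point iteration over a dependency map. The module names are hypothetical placeholders, not real KDE Frameworks data, and this is only an illustration; build.kde.org finds out which frameworks work by actually building them.

```python
from typing import Dict, List, Set


def buildable(deps: Dict[str, List[str]], available: Set[str]) -> Set[str]:
    """Return the modules that can be built, given each module's direct
    dependencies and the set of external dependencies available on the
    target platform. Modules caught in a dependency cycle are never built."""
    built: Set[str] = set()
    changed = True
    # Keep sweeping until no new module becomes buildable (fixed point).
    while changed:
        changed = False
        for module, reqs in deps.items():
            if module in built:
                continue
            if all(r in available or r in built for r in reqs):
                built.add(module)
                changed = True
    return built


if __name__ == "__main__":
    # Hypothetical dependency map; "libfoo" stands in for a library
    # that is missing on Android.
    deps = {
        "FrameworkA": ["Qt5Core"],
        "FrameworkB": ["FrameworkA", "libfoo"],
        "FrameworkC": ["FrameworkA"],
        "FrameworkD": ["FrameworkB"],
    }
    print(sorted(buildable(deps, {"Qt5Core"})))
```

In this toy map, the single missing `libfoo` rules out not only `FrameworkB` but also `FrameworkD`, which only depends on it indirectly.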

I want to develop

Here you have a wiki page that explains how to use the Docker image to build your KDE application.

I want to test

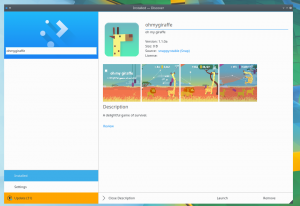

I set up an F-Droid repository that conveniently offers the binaries listed on the Binary Factory, so that whenever there is a new version, you get the update.

Future

All of this ties directly into the runtime integration I already mentioned in this blog post.