In a product like Plasma, knowing the kind of things our existing users care about and use sheds light on what needs polishing or improving. At the moment, the input we have is either the one from the loudest most involved people or outright bug reports, which lead to a confirmation bias.

What do our users like about Plasma? On which hardware do people use Plasma? Are we testing Plasma on the same kind of hardware Plasma is being used for?

Some time ago, Volker Krause started up the KUserFeedback framework with two main features. First, allowing to send information about application’s usage depending on certain users’ preferences and include mechanisms to ask users for feedback explicitly. This has been deployed into several products already, like GammaRay and Qt Creator, but we never adopted it in KDE software.

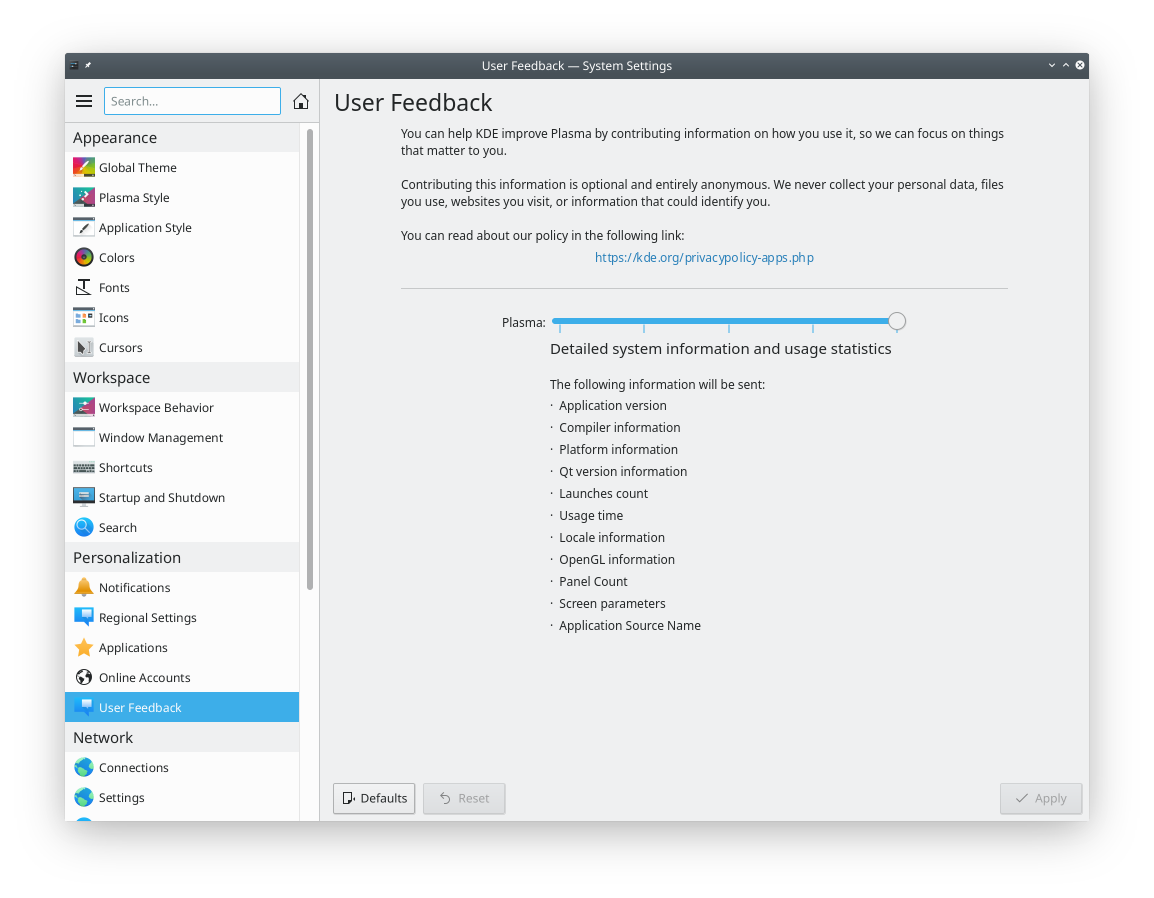

The first step has been to allow our users to tune how much information Plasma products should be telling KDE about the systems they run on.

This mechanism is only integrated into Plasma and Discover right now, but I’d like to extend this to others like System Settings and KWin in the future too.

Privacy

We very well understand how this is related to privacy. As you can see, we have been careful about only requesting information that is important for improving the software, and we are doing so while making sure this information is as unidentifiable and anonymous as possible.

In the end, I’d say we all want to see Free Software which is respectful of its users and that responds to people rather than the few of us working from a dark (or bright!) office.

In case you have any doubts, you can see KDE’s Applications Privacy Policy and specifically the Telemetry Policy.

Plasma 5.18

This will be coming in really soon in the next Plasma release early next February 2020. This is all opt-in, you will have to enable it. And please do so, let it be another way how you get to contribute to Free Software. 🙂

If you can’t find the module, please tell your distribution. The feature is very new and if the KUserFeedback framework isn’t present it won’t be built.